VentureBeat: Consilient, UCSF Health, Intel deploy confidential computing to safeguard data in use

This article originally appeared in VentureBeat.

Confidential computing and privacy-preserving analytics are relatively new terms in the tech world. Yet they’ve continued to gain wider interest as a way to solve the complex challenges of protecting and securing data in use and algorithm code bases on secure public clouds, at the edge, and in data centers — especially for large compute requirements around artificial intelligence.

This emerging approach provides a secure, hardware-based platform (confidential computing) for multiple parties to collaborate, allowing an algorithm to compute on sensitive data (via privacy preserving analytics) without exposing either to the other party. Together, the technologies support secure enclaves, virtual machines, databases, and more. Interest has been strong, especially in health care, finance, and government.

Two early-adopter examples show the possibilities. In health care, the University of California San Francisco (UCSF) is leveraging its BeeKeeperAI Zero Trust solution to accelerate the validation of clinical-grade AI algorithms that can safely and consistently perform at the point of care. This markedly reduces time and cost, while addressing data security concerns using the latest confidential computing solutions. In finance, Consilient’s next-generation, AI-based system fights money laundering and the financing of terrorism, increasing fraud detection by more than 75%. Both cases illustrate how confidential computing can enable collaboration while preserving privacy and regulatory compliance.

UCSF: Accelerating better clinical outcomes and equitable access

Unique challenges face developers of health care AI aimed at improving clinical outcomes and workflow efficiencies. UCSF’s Center for Digital Health Innovation (CDHI) experienced these first-hand with partner GE Healthcare in their co-development of the industry’s first regulatory-cleared, on-device health care AI solution.

To ensure equitable care for all patients, health care AI applications are required by regulators to be ethnically, clinically, and geographically agnostic. Validating AI models that perform consistently across multiple variables, including race, workflows, and treatment settings, requires timely access to highly diverse, non-biased data.

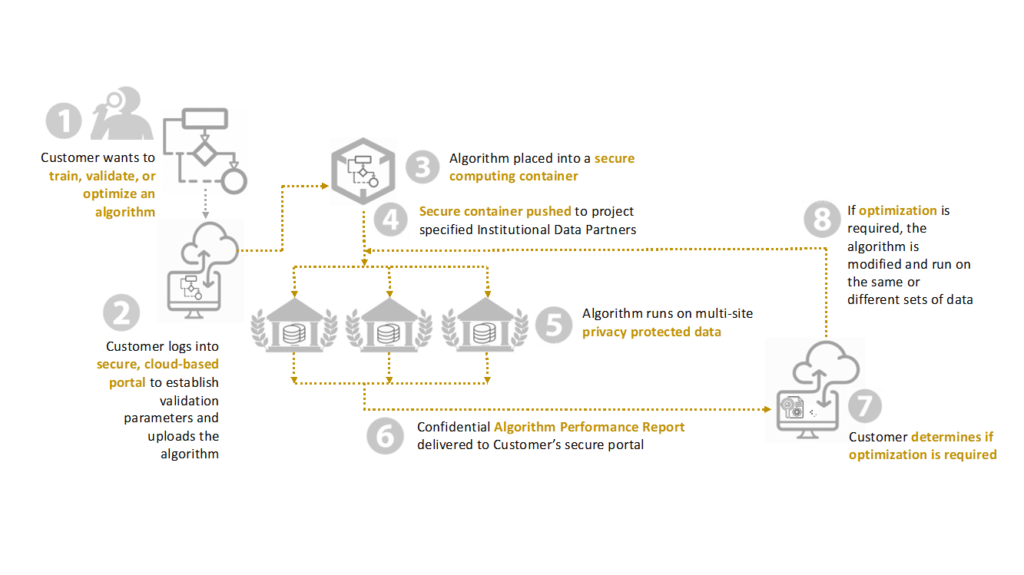

UCSF’s BeeKeeperAI Zero Trust platform helps accelerate health care AI by applying privacy-preserving computing to protected, harmonized clinical and health data from multiple institutions. In its proof of concept, BeeKeeperAI leveraged Intel Software Guard Extensions (SGX) and Fortanix’s confidential computing platform running in Microsoft Azure CCE cloud to enable several key protections:

- Data is never moved, shared, or seen. BeeKeeperAI uses secure computing enclave technology that lets an AI algorithm validate on a protected data set resulting in a performance report. Data does not leave the enclave and is never visible to the algorithm developer.

- Algorithm IP is protected. BeeKeeperAI lets the algorithm developer submit an encrypted, containerized AI model to a secure enclave running in the cloud infrastructure of the data owner. The Fortanix software stack manages keys and workflow to submit the encrypted container to the SGX secure enclave on Azure. The data owner can never see the algorithm inside the container.

- The algorithm developer receives only a validation report. BeeKeeperAI’s validation report provides statistics on the sensitivity and specificity of the algorithm’s performance. These high-level statistics can’t be used to infer anything about the data on which the algorithm was validated.

- An encrypted artifact is stored for regulatory purposes. The artifact contains encrypted documentary information about the validation results.

Taken together, these security measures help health care organizations protect their data while accelerating the research and development of clinical AI to improve patient care and operational efficiencies.
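The validation report described above can be illustrated with a minimal Python sketch. It shows how sensitivity and specificity are computed from a model's predictions on labeled cases, and how only those two aggregate numbers are returned to the caller. The names `ValidationReport`, `validate`, `toy_model`, and `toy_cases` are hypothetical and are not part of BeeKeeperAI, Fortanix, or Intel SGX; the enclave itself and all encryption are not modeled here.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Tuple


@dataclass(frozen=True)
class ValidationReport:
    """Aggregate statistics only; no individual records are included."""
    sensitivity: float  # true positives / all cases that have the condition
    specificity: float  # true negatives / all cases that lack the condition


def validate(
    model: Callable[[float], bool],
    labeled_cases: Iterable[Tuple[float, bool]],
) -> ValidationReport:
    """Run the model on every labeled case and return summary statistics."""
    tp = fn = tn = fp = 0
    for features, has_condition in labeled_cases:
        predicted = model(features)
        if has_condition:
            if predicted:
                tp += 1
            else:
                fn += 1
        else:
            if predicted:
                fp += 1
            else:
                tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    return ValidationReport(
        sensitivity=tp / (tp + fn),
        specificity=tn / (tn + fp),
    )


# Hypothetical toy model and data, for illustration only.
def toy_model(score: float) -> bool:
    return score >= 0.5


toy_cases = [
    (0.9, True), (0.8, True), (0.3, True),
    (0.6, False), (0.2, False), (0.1, False), (0.4, False),
]

report = validate(toy_model, toy_cases)
print(report)
```

In this toy run the model finds two of the three positive cases and correctly clears three of the four negative cases, so the report carries a sensitivity of 2/3 and a specificity of 3/4 and nothing else.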

BeeKeeperAI accelerates the development of health care AI by supporting single-source contracting across multiple academic medical institutions and by standardizing the arm's-length harmonization and transformation of data, which remains in the secure control of the data owner at all times.

Identifying patients for retinopathy treatment

BeeKeeperAI technology is being employed to create a diagnostic screening tool for diabetic retinopathy, the primary cause of blindness in diabetic patients. AiVision’s Olyatis solution uses artificial intelligence algorithms to read images of the eye fundus (the part opposite the pupil).

Here’s how it will work: During Phase One collaboration, the BeeKeeperAI confidential computing enclave, powered by Fortanix, will be deployed into the UCSF HIPAA-compliant Azure environment. There, secured by Intel’s SGX technology, UCSF will place an encrypted fundus photo data set into a secured container. AiVision will place the encrypted algorithm into a secure container. Upon mutual agreement, the Olyatis model will move into UCSF’s secure container, where it will be run on the encrypted photo data set. The resulting output will be a validation report documenting the sensitivity and specificity of the algorithm’s performance on the encrypted data. Throughout this process, neither the data nor the algorithm will be visible to the other party.

Consilient: Fighting financial crime, 21st century style

Every year, financial institutions and governments around the globe spend billions on anti-money laundering and countering the financing of terrorism (efforts known in the industry as AML/CFT). Unfortunately, experts say the bad actors are winning. The UN estimates that trillions of dollars are laundered every year, roughly 2-5% of worldwide GDP. One study found compliance costs are a hundred times greater than recovered criminal funds.

“The current global AML/CFT system is an outdated model that requires a new 21st-century design,” says Juan Zarate, co-founder and chairman of Consilient, a fintech company launched in October. The first-ever Assistant Secretary of the U.S. Treasury for terrorist financing and financial crimes, and global co-managing partner and chief strategy officer at K2 Integrity, Zarate says Consilient is reinventing today’s costly and ineffectual system with “revolutionary” next-generation architecture, governance, and analytics.

Consilient’s Dozer technology uses transfer-learning, so that models can be trained across multiple sets of training data, allowing financial institutions to collaborate without putting private data at risk. This secure computing uses Intel SGX technology, which uses a hardware-based Trusted Execution Environment (TEE) to help isolate and protect specific application code and data in memory.

The company says its “leapfrog” approach lets financial institutions, authorities, and other regulated actors discover and manage evolving and complicated illicit risks more proactively, effectively, and efficiently.

Safely spanning silos

Consilient helps overcome one of the biggest challenges hamstringing financial crime fighters: sharing information among siloed institutions. Today’s reactive system relies on individual institutions to detect and report suspicious activity on their own, explains Zarate. Policy and operational concerns, especially data privacy and security, inhibit information sharing, dynamic feedback, and collective learning, both within and outside the institution. The resulting blind spots make it difficult to identify illicit activity in “island” systems and economies. These limitations paved the way for Consilient’s behavior-based, ML-driven governance model, which allows its algorithm to access and interrogate data sets in different institutions, databases, and even jurisdictions without ever moving the data.

Federated analytics improve accuracy

To make this work, Consilient employs federated learning, a model for distributed machine learning across large and/or diverse datasets. The algorithm, not data, is exchanged between servers at different institutions, enabling it to detect illicit activity more accurately within their networks. Secure, private processing and analysis take place within a protected memory enclave created by Intel SGX built into Xeon processors. This “black box” approach eliminates the need to create complex webs of trust, where data or code could still be exposed to an untrusted party. It facilitates cross-industry machine learning, while still helping to maintain the privacy of individual data and the confidentiality of proprietary algorithms.
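The idea of exchanging the algorithm rather than the data can be sketched in a few lines of Python. The example below uses federated averaging, one common federated learning scheme; the article does not say which scheme Consilient uses, so this is an assumption for illustration. The function names, the tiny linear model, and the two "bank" datasets are all hypothetical, and the SGX enclave that would protect each local step is not modeled.

```python
from typing import List, Sequence, Tuple

Weights = Tuple[float, float]          # (slope, intercept) of y = w * x + b
Dataset = Sequence[Tuple[float, float]]  # (x, y) pairs held by one institution


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.1, epochs: int = 20) -> Weights:
    """Train on one institution's private data; only weights leave."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error for a 1-D linear model.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Combine local models, weighting each by its dataset size."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return (w, b)


def train(institutions: List[Dataset], rounds: int = 50) -> Weights:
    """Each round: send the model out, train locally, average the results."""
    weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(weights, data) for data in institutions]
        weights = federated_average(updates, [len(d) for d in institutions])
    return weights


# Hypothetical private datasets, both drawn from y = 2x + 1.
bank_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
bank_b = [(-1.0, -1.0), (0.5, 2.0), (1.5, 4.0), (3.0, 7.0)]

w, b = train([bank_a, bank_b])
print(f"learned slope={w:.4f}, intercept={b:.4f}")
```

The coordinator in `train` never reads `bank_a` or `bank_b` directly. It only receives the weights each institution returns, and the shared model still converges toward the slope of 2 and intercept of 1 that underlie both datasets.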

Consilient can analyze, identify, and find “normal” and “abnormal” patterns in datasets that human eyes and most current technologies cannot see. Traditional rule-based screening and AML/CFT systems typically have a false-positive rate exceeding 95%. Self-learning Dozer technology has been shown to reduce the false-positive rate to 12%.
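To get a feel for what those two rates mean for analyst workload, here is a small worked example in Python. It assumes that "false-positive rate" means the share of all alerts that turn out to be false alarms, which is a common usage in AML screening but is not defined in the article. It also assumes, purely for illustration, a hypothetical 50 genuine hits that both systems catch.

```python
def alerts_to_review(true_hits: int, false_positive_share: float) -> float:
    """Total alerts implied when a given share of all alerts are false."""
    if not 0 <= false_positive_share < 1:
        raise ValueError("false_positive_share must be in [0, 1)")
    # If a fraction f of alerts are false, true hits make up (1 - f) of them.
    return true_hits / (1 - false_positive_share)


TRUE_HITS = 50  # hypothetical number of genuine cases, not from the article

rule_based = alerts_to_review(TRUE_HITS, 0.95)
dozer = alerts_to_review(TRUE_HITS, 0.12)

print(f"rule-based alerts: {rule_based:.0f}")
print(f"alerts at a 12% false-positive rate: {dozer:.0f}")
```

Under these assumptions, a 95% rate implies roughly 1,000 alerts to surface the 50 genuine cases, while a 12% rate implies roughly 57.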

Industry takes notice

With Consilient, government and financial institutions can detect enormous amounts of illicit activity quickly, with far greater accuracy. The company says this capability will help organizations save costs, redeploy personnel, and more effectively prioritize efforts to counter illicit finance.

Industry has taken notice; several banks are evaluating the Consilient solution. “Identifying and disrupting the financial networks of criminal enterprises is a top priority for our member banks,” says Greg Baer, president and CEO of the Bank Policy Institute. “This promising technology presents new opportunities to more effectively identify illicit financing at the source.”

Go deeper:

Confidential Computing with Intel Software Guard Extensions (video)

Federated Learning through Revolutionary Technology (white paper)

Why Intel believes confidential computing will boost AI and machine learning (article)